JobTrack AI

Case Study

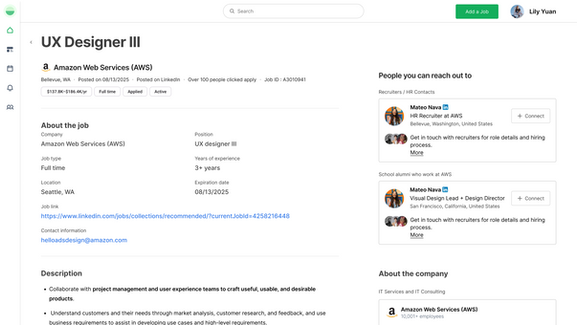

From chaos to clarity: designing a smarter job search experience

Background

As a designer starting to re-enter the job market, I experienced firsthand how overwhelming and scattered the job search process can be. I was juggling multiple platforms — LinkedIn, Indeed, email threads, spreadsheets — and still struggling to keep track of deadlines, follow-ups, and resume versions.

When I spoke with friends and classmates, I realized it wasn’t just me. Everyone had the same frustrations: missed opportunities, duplicate applications, and a constant sense of uncertainty about where they stood in the process.

That’s when I saw an opportunity to create a smarter, simpler way to manage job applications — a tool that combines clean, intuitive design with AI-powered support, so job seekers can stay organized, focused, and confident.

Problem Statement

Job seekers often feel overwhelmed and disorganized during their job search, especially when applying to many roles across various platforms. They struggle to remember which jobs they applied for, what stage they’re at, or whether they followed up with recruiters.

This lack of visibility and structure can lead to missed opportunities, duplicate applications, or delayed responses—ultimately hurting their chances of landing a job.

Research

To deeply understand the challenges job seekers face, I used a mixed-methods research approach that combined surveys, competitor analysis, and in-depth interviews.

1. Survey

I started with a survey to gather broad insights on application habits and tools.

- 60% of respondents admitted they don’t track their applications at all.
- Those who did relied on lightweight tools like Trello, Notion, or spreadsheets, but most abandoned them quickly.
- Over 70% expressed openness to using AI if it could save time and reduce effort.

2. Competitor Analysis

Next, I analyzed six tools: Eztrackr, Huntr, Trello, Teal, Workmate, and JobCopilot.

Key takeaways included:

- Existing tools are either too basic (just logging data) or too complex (overwhelming for non-technical users).
- None provided actionable insights or smart automation paired with an intuitive, user-friendly interface.

3. User Interviews

I conducted six user interviews with a diverse group of job seekers: interns, designers, engineers, and researchers.

Key observations included:

- Disorganized workflows with fragmented tracking across platforms
- Anxiety around preparing for interviews and keeping follow-ups organized
- A desire for clear guidance and human-controlled AI support rather than black-box automation

Key Findings

From the survey, competitor analysis, and user interviews, four key insights emerged:

- Fragmented tracking leads to missed opportunities: Users rely on scattered tools (LinkedIn bookmarks, email threads, notes apps) with no single source of truth. This causes missed follow-ups, duplicate applications, and disorganized job histories.
- Simplicity drives adoption: Overly complex systems like Notion or custom spreadsheets often get abandoned. Users want a clean, visual interface, ideally with a Kanban-style board for clarity.
- AI can boost efficiency, but must stay under user control: Participants liked the idea of AI automating repetitive tasks, such as parsing job details or drafting follow-ups, but wanted the ability to review, edit, and approve every action.
- Users crave actionable guidance, not just data: They don’t just want to know their status. They want insights (who to reach out to, how to prepare for each interview stage, and reminders for next steps) to build confidence and momentum.
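The third finding, AI that stays under user control, can be illustrated with a small review queue. This is only a sketch under stated assumptions: the `Suggestion` and `ReviewQueue` names, the `follow_up_email` label, and the stubbed draft text are hypothetical and not part of the JobTrack AI prototype. It shows that an AI draft is held as pending, can be edited, and is applied only after explicit approval.

```python
from dataclasses import dataclass, field


@dataclass
class Suggestion:
    kind: str               # hypothetical label, e.g. "follow_up_email"
    text: str               # AI-drafted content (stubbed; no model is called here)
    approved: bool = False  # nothing is applied until the user approves


@dataclass
class ReviewQueue:
    pending: list = field(default_factory=list)
    applied: list = field(default_factory=list)

    def propose(self, kind: str, text: str) -> Suggestion:
        # The AI side only proposes; the draft waits for the user.
        suggestion = Suggestion(kind, text)
        self.pending.append(suggestion)
        return suggestion

    def edit(self, suggestion: Suggestion, new_text: str) -> None:
        # Editing resets approval so the user confirms the final wording.
        suggestion.text = new_text
        suggestion.approved = False

    def approve(self, suggestion: Suggestion) -> None:
        suggestion.approved = True

    def apply_approved(self) -> list:
        # Only approved suggestions leave the queue.
        ready = [s for s in self.pending if s.approved]
        self.pending = [s for s in self.pending if not s.approved]
        self.applied.extend(ready)
        return ready
```

In this sketch, calling `apply_approved()` before the user approves anything returns an empty list, which mirrors the "review, edit, and approve every action" expectation from the interviews.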

Target Audience, Personas & Journey Maps

JobTrack AI is aimed at active job seekers, from recent graduates to mid-career professionals, who apply across multiple platforms (e.g., LinkedIn, Indeed) and want a simple, centralized way to track applications, avoid duplicates, and prepare for interviews with human-controlled AI support.

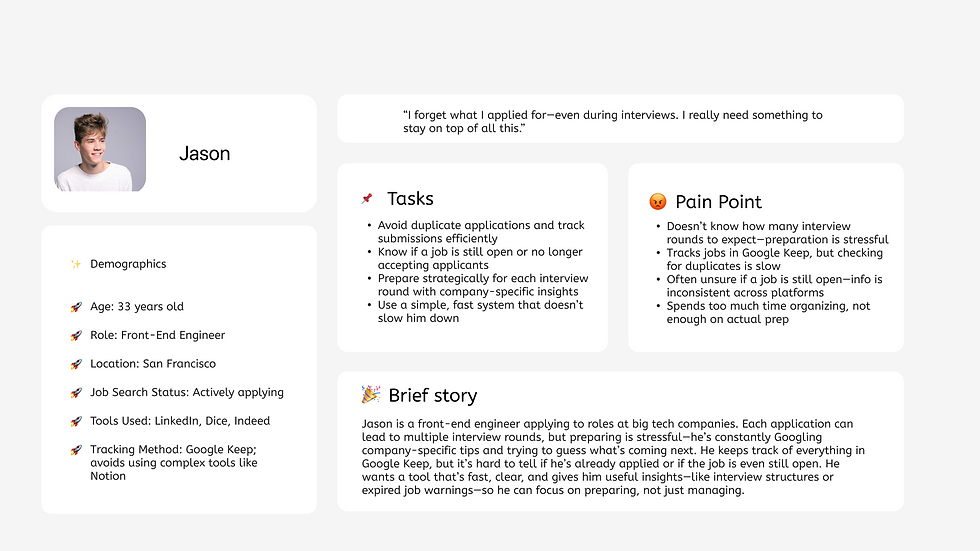

To better understand the target users, I created two personas, Jason and Emily, derived directly from interview insights and research data. Each persona represents a key segment of job seekers with distinct behaviors, pain points, and goals.

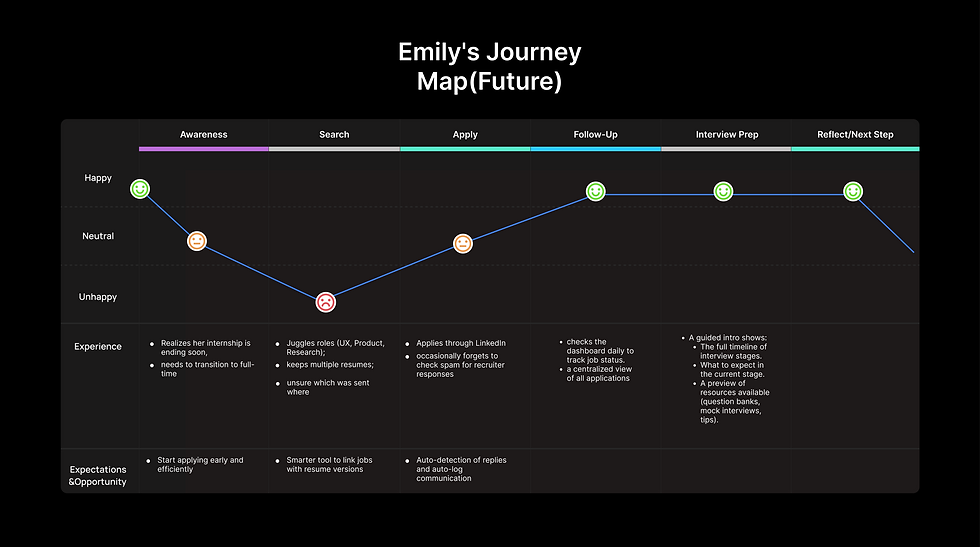

After defining these personas, I mapped their current and future journeys to visualize their experiences throughout the job search process. These journey maps were essential in identifying pain points and opportunities, helping me focus on the most critical areas during ideation and prototyping.

For this case study, I’ll walk through:

- Jason’s journey as an experienced engineer balancing multiple applications while struggling with disorganization and lack of guidance.
- Emily’s journey as an intern transitioning to full-time roles, dealing with scattered communications and resume confusion.

These maps not only clarified user needs but also served as a blueprint for design decisions — ensuring that every feature in the prototype was grounded in real user experiences.

Ideation & Prototypes

I explored flows and interface patterns through quick sketches and whiteboard sessions on paper and Figma. Early wireflows clarified the add-job title → add-job → track-status → interview-prep path, which informed the first clickable prototype.

The prototypes for this project were designed to be more than just static wireframes. They offered an interactive, end-to-end experience, allowing users to walk through every key step of the job search journey — from adding job titles and logging applications, to tracking progress across the Kanban board, and finally, preparing for interviews with AI-powered insights.
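The add-job, track-status part of that flow can be sketched as a small data model. This is a minimal illustration, not the prototype's implementation: the stage names, the `Application` and `Board` classes, and the duplicate check on company plus role are all assumptions made for the example, since the case study does not list the board's actual columns.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    # Hypothetical column names; the case study does not list the board's columns.
    SAVED = "Saved"
    APPLIED = "Applied"
    INTERVIEWING = "Interviewing"
    OFFER = "Offer"
    CLOSED = "Closed"


@dataclass
class Application:
    company: str
    role: str
    stage: Stage = Stage.SAVED

    def key(self) -> tuple:
        # Normalized identity used to spot duplicate applications.
        return (self.company.strip().lower(), self.role.strip().lower())


class Board:
    def __init__(self) -> None:
        self._apps = {}

    def add_job(self, company: str, role: str) -> Application:
        app = Application(company, role)
        if app.key() in self._apps:
            # Surfacing duplicates addresses one of the pain points from research.
            raise ValueError(f"Already tracking {role} at {company}")
        self._apps[app.key()] = app
        return app

    def move(self, app: Application, stage: Stage) -> None:
        # Dragging a card between columns maps to a stage change.
        app.stage = stage

    def columns(self) -> dict:
        # Group applications by stage, one list per Kanban column.
        cols = {stage: [] for stage in Stage}
        for app in self._apps.values():
            cols[app.stage].append(app)
        return cols
```

A single keyed store like this is one way to give users the "single source of truth" the research pointed to, with the board view derived from it rather than maintained separately.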

Usability Testing

To validate the prototype and uncover opportunities for improvement, I conducted usability testing sessions with a mix of target users, including designers, engineers, and recent graduates actively searching for jobs.

The testing focused on three key flows:

- Adding job titles and adding a new job
- Tracking job progress across the Kanban board
- Preparing for interviews with AI-driven insights

Key Insights

- Clear affordances matter: When buttons and actions behaved as users expected, interactions felt smooth and intuitive, reducing hesitation.
- Visual cues enhance orientation: Icons, progress indicators, and labels helped users stay focused and complete tasks faster.
- AI-powered prep stood out: Participants found the AI interview prep tool especially valuable, saying it reduced stress and saved time. However, some users missed the entry point, highlighting a need to improve visibility and guidance.

Final Thoughts

Designing JobTrack AI was more than just creating a job tracking tool — it was about understanding the emotional journey of job seekers and building a solution that feels simple, supportive, and smart.

This project taught me the importance of grounding every design decision in real user insights. From early research and user interviews to testing interactive prototypes, each step reinforced the need for a balance between AI-powered automation and human-centered control.

Looking ahead, I see opportunities to refine the experience by:

- Enhancing feature discoverability with guided onboarding
- Expanding AI-driven recommendations for interview preparation
- Iterating based on continuous user feedback

Most importantly, this project reminded me that great design starts with empathy — and ends with empowering users to feel confident and in control of their journey.

Activity Timeline

Week 1 — July 10–16, 2025: Project scoping; competitor scan

Week 2 — July 17–23, 2025: Interview guide & screener; initial interviews

Week 3 — July 24–30, 2025: Remaining interviews; analysis; initial personas & journey maps

Week 4 — July 31–Aug 6, 2025: Synthesis; core features; user flows

Week 5 — Aug 7–13, 2025: Wireframes & interaction states; low/mid-fi prototype; design system

Week 6 — Aug 14–20, 2025: Usability testing; insights & next steps

Week 7 — Aug 21–27, 2025: High-fidelity prototype

Week 8 — Aug 28–Sep 3, 2025: Iteration; final deck & case study

References

- Lifeshack. How Many Applications Does It Take to Land a Job in 2024? https://www.lifeshack.com/resources/job-search/how-many-applications-does-it-take-to-find-a-job-in-2024
- JobCopilot. Features Overview. https://www.jobcopilot.com/
- Huntr. Track and Manage Job Applications. https://huntr.co/
